What is entropy? An explanation of the term in simple words


Introduction

Entropy concept

Entropy Measurement

Concepts and examples of entropy increase

Conclusion

References

Introduction

Natural science is a branch of science based on reproducible empirical testing of hypotheses and the creation of theories or empirical generalizations that describe natural phenomena.

The subject of natural science is the facts and phenomena perceived by our senses. The scientist’s task is to generalize these facts and create a theoretical model of the natural phenomenon under study, including the laws governing it. Phenomena, such as the falling of bodies, are given to us in experience; a law of science, such as the law of universal gravitation, offers one way of explaining these phenomena. Facts, once established, always remain relevant; laws can be revised or adjusted in light of new data or a new concept that explains them. Facts of reality are a necessary component of scientific research.

The basic principle of natural science states 1: knowledge about nature must be capable of empirical verification. This does not mean that a scientific theory must be confirmed immediately, but that each of its provisions must be such that verification is possible in principle.

What distinguishes natural science from the technical sciences is that it aims primarily not at transforming the world but at understanding it. What distinguishes natural science from mathematics is that it studies natural systems rather than sign (symbolic) systems. Let’s try to connect the natural, technical and mathematical sciences through the concept of “entropy”.

Thus, the purpose of this work is to consider and solve the following problems:

- the concept of entropy;

- entropy measurement;

- concepts and examples of increasing entropy.

The concept of entropy

The concept of entropy was introduced by R. Clausius 2, who formulated the second law of thermodynamics, according to which the transition of heat from a colder body to a warmer one cannot occur without the expenditure of external work.

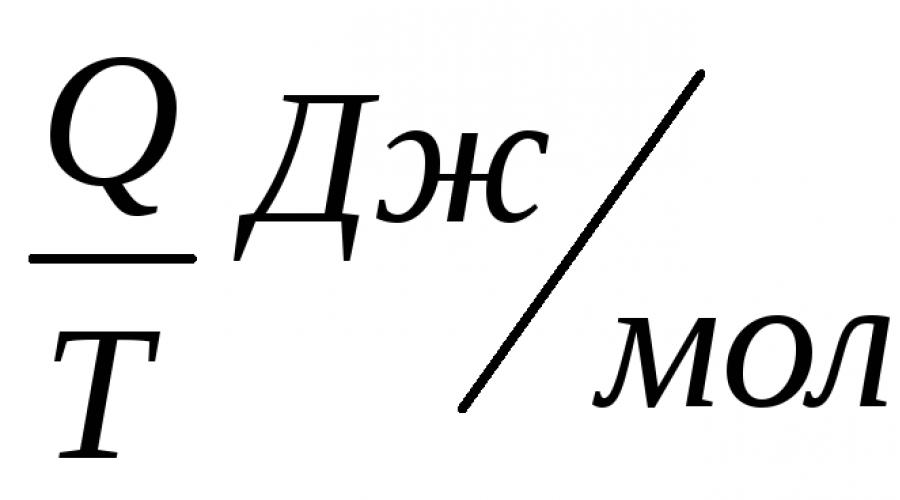

He defined the change in entropy of a thermodynamic system during a reversible process as the ratio of the amount of heat ΔQ to the absolute temperature T:

$$\Delta S = \frac{\Delta Q}{T}$$

Rudolf Clausius gave the quantity S the name "entropy", from the Greek word τροπή, "turn" (change, transformation).

This formula applies only to an isothermal process (one occurring at constant temperature). Its generalization to an arbitrary quasi-static process is:

$$dS = \frac{\delta Q}{T},$$

where dS is the increment (differential) of entropy, and δQ is the infinitesimal increment of the amount of heat.
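The following is a minimal numerical sketch of the isothermal case ΔS = ΔQ/T. It uses melting ice, which absorbs heat at a constant 273.15 K. The 1 kg mass and the latent heat of fusion of about 334 kJ/kg are assumed illustrative values that do not come from the text, and the function name is hypothetical.

```python
# Entropy change for heat exchanged reversibly at constant temperature: dS = Q / T.
# Assumed illustrative values: 1 kg of ice, latent heat of fusion ~334 kJ/kg.

def isothermal_entropy_change(heat_j: float, temperature_k: float) -> float:
    """Return Delta S = Q / T in J/K (valid only for a reversible isothermal process)."""
    if temperature_k <= 0:
        raise ValueError("absolute temperature must be positive")
    return heat_j / temperature_k


MASS_KG = 1.0
LATENT_HEAT_J_PER_KG = 334_000.0   # assumed approximate value for water ice
T_MELT_K = 273.15                  # melting point of ice at atmospheric pressure

q_melt = MASS_KG * LATENT_HEAT_J_PER_KG
delta_s = isothermal_entropy_change(q_melt, T_MELT_K)
print(f"Q = {q_melt:.0f} J, dS = {delta_s:.1f} J/K")
```

No integration is needed here because the temperature does not change during melting. When the temperature varies, the increments δQ/T must be summed instead.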

Note that entropy is a function of state, so the left-hand side of the equation is its total differential. By contrast, the amount of heat depends on the process by which it was transferred, so δQ can in no case be considered a total differential.

Entropy is thus defined only up to an arbitrary additive constant. The third law of thermodynamics fixes this constant: the entropy of an equilibrium system at absolute zero temperature is taken to be zero.

Entropy is a quantitative measure of the heat that is not converted into work.

$$S_2 - S_1 = \Delta S = \int_1^2 \frac{\delta Q}{T}$$

In other words, entropy is a measure of the dissipation of free energy. But we already know that any open thermodynamic system in a stationary state tends to minimize the dissipation of free energy. Therefore, if for some reason the system deviates from the stationary state, then, because the system tends toward minimum entropy production, internal changes arise in it that return it to the stationary state.

As can be seen from the above, entropy characterizes the direction of processes in a closed system. In accordance with the second law of thermodynamics 3, an increase in entropy corresponds to heat flowing from a hotter body to a less hot one. Entropy in a closed system keeps increasing until the temperature has equalized throughout the entire volume of the system. At that point the system reaches thermodynamic equilibrium: directed heat flows stop, and the system becomes homogeneous.
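To see why heat flowing from a hotter body to a colder one raises the total entropy, here is a small sketch that applies ΔS = Q/T to each body separately. It assumes both reservoirs are large enough that their temperatures stay fixed. The numbers (1000 J, 400 K, 300 K) and the function name are illustrative only.

```python
def heat_transfer_entropy(q_j: float, t_hot_k: float, t_cold_k: float):
    """Entropy changes when heat q_j flows from a reservoir at t_hot_k to one at t_cold_k.

    Both reservoirs are assumed so large that their temperatures do not change.
    Returns (dS_hot, dS_cold, dS_total) in J/K.
    """
    ds_hot = -q_j / t_hot_k    # the hot body loses heat Q at T_hot
    ds_cold = q_j / t_cold_k   # the cold body gains the same heat Q at T_cold
    return ds_hot, ds_cold, ds_hot + ds_cold


ds_hot, ds_cold, ds_total = heat_transfer_entropy(1000.0, 400.0, 300.0)
print(f"hot: {ds_hot:+.3f} J/K, cold: {ds_cold:+.3f} J/K, total: {ds_total:+.3f} J/K")
# hot: -2.500 J/K, cold: +3.333 J/K, total: +0.833 J/K
```

The cold body gains more entropy than the hot body loses because the same heat is divided by a lower temperature. If you call the function with a negative `q_j` (heat flowing from cold to hot), the total comes out negative. This is why such a flow does not occur on its own.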

The absolute value of entropy depends on a number of physical parameters. At fixed volume, entropy increases with temperature, and at fixed temperature it increases with increasing volume and decreasing pressure. Heating a system can be accompanied by phase transitions that lower its degree of order, as a solid turns into a liquid and a liquid into a gas. When a substance is cooled, the reverse happens and the order of the system increases. This ordering shows in the molecules occupying increasingly definite positions relative to each other; in a solid, their positions are fixed by the crystal lattice.

In other words, entropy is a measure of chaos 4 (a notion whose definition has long been debated).

All processes in nature proceed in the direction of increasing entropy. The thermodynamic equilibrium of a system corresponds to the state with maximum entropy, and this equilibrium is called absolutely stable. Thus, an increase in the entropy of a system means a transition to a more probable state. That is, entropy characterizes the probability with which a particular state is established and is a measure of chaos or irreversibility: a measure of the chaos in the arrangement of atoms, photons, electrons and other particles. The more order, the less entropy. The more information enters a system, the more organized it is and the lower its entropy (according to Shannon's theory 5).
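Shannon's measure can be sketched in a few lines. The sketch assumes the standard formula H = -Σ pᵢ log₂ pᵢ, measured in bits, and the function names are illustrative. It shows the two extremes: a certain outcome (full information) has zero entropy, and equally likely outcomes (maximum uncertainty) have the highest entropy.

```python
import math
from collections import Counter


def shannon_entropy(probabilities):
    """Shannon entropy H = -sum(p * log2 p) in bits; zero probabilities contribute nothing."""
    if not math.isclose(sum(probabilities), 1.0, rel_tol=1e-9):
        raise ValueError("probabilities must sum to 1")
    return sum(-p * math.log2(p) for p in probabilities if p > 0)


def entropy_of_message(message: str) -> float:
    """Entropy per symbol, estimated from the symbol frequencies of a message."""
    counts = Counter(message)
    return shannon_entropy([c / len(message) for c in counts.values()])


print(shannon_entropy([1.0]))         # 0.0 bits: the outcome is certain
print(shannon_entropy([0.5, 0.5]))    # 1.0 bit: a fair coin
print(shannon_entropy([1 / 8] * 8))   # 3.0 bits: eight equally likely outcomes
print(entropy_of_message("aaaaaaaa"), entropy_of_message("abcdabcd"))
```

A perfectly repetitive message carries no uncertainty per symbol. A message that uses four symbols equally often needs two bits per symbol.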

Entropy (from ancient Greek ἐντροπία “turn”, “transformation”) is a term widely used in the natural and exact sciences. It was first introduced in thermodynamics as a function of the state of a thermodynamic system that determines the measure of irreversible energy dissipation. In statistical physics, entropy characterizes the probability of a given macroscopic state. Besides physics, the term is widely used in mathematics, in information theory and mathematical statistics.

This concept entered science back in the 19th century. Initially it applied to the theory of heat engines, but it quickly appeared in other areas of physics, especially in the theory of radiation. Very soon, entropy began to be used in cosmology, biology, and information theory. Different fields of knowledge distinguish different kinds of measures of chaos:

- informational;

- thermodynamic;

- differential;

- cultural, etc.

For example, molecular systems are described by the Boltzmann entropy, which measures their chaos and homogeneity. Boltzmann established a relationship between the measure of chaos and the probability of a state. In thermodynamics, entropy is considered a measure of irreversible energy dissipation, and it is a function of the state of a thermodynamic system. In an isolated system, entropy grows toward its maximum value, which corresponds to a state of equilibrium. Information entropy is a measure of uncertainty or unpredictability.
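Boltzmann's link between chaos and probability is usually written S = k_B ln W, where W is the number of microstates compatible with a macrostate. The toy system below is an assumption chosen for illustration: N two-state particles, with the macrostate given by how many are in the "up" state. The sketch shows that the evenly mixed macrostate has by far the most microstates and therefore the highest entropy.

```python
import math

K_B = 1.380649e-23  # Boltzmann constant in J/K (exact by the 2019 SI definition)


def microstates(n_particles: int, n_up: int) -> int:
    """W: number of ways to choose which n_up of n_particles are in the 'up' state."""
    return math.comb(n_particles, n_up)


def boltzmann_entropy(w: int) -> float:
    """S = k_B * ln W, in J/K."""
    return K_B * math.log(w)


N = 100
for n_up in (0, 10, 50):
    w = microstates(N, n_up)
    print(f"n_up={n_up:3d}  W={w:.3e}  S={boltzmann_entropy(w):.3e} J/K")
```

Even with only 100 particles, the evenly mixed macrostate has about 10^29 microstates, while the fully ordered state has just one. For a macroscopic number of particles, the imbalance is vastly larger.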

Entropy can be interpreted as a measure of the uncertainty (disorder) of a system. An example is an experiment (trial) that can have different outcomes, so entropy also measures the amount of information. Thus, another interpretation of entropy is the information capacity of the system. Related to this interpretation is the fact that Claude Shannon, who created the concept of entropy in information theory, initially wanted to call this quantity "information".

For reversible (equilibrium) processes, the following equality holds (a consequence of the so-called Clausius equality):

$$\int_1^2 \frac{\delta Q}{T} = S_2 - S_1,$$

where δQ is the supplied heat, T is the temperature, 1 and 2 are the states, and $S_1$ and $S_2$ are the entropies corresponding to these states (here the transition from state 1 to state 2 is considered).

For irreversible processes, the so-called Clausius inequality gives:

$$\int_1^2 \frac{\delta Q}{T} < S_2 - S_1,$$

where δQ is the heat supplied, T is the temperature, 1 and 2 are the states, and $S_1$ and $S_2$ are the entropies corresponding to these states.

Therefore, the entropy of an adiabatically isolated (no heat supply or removal) system can only increase during irreversible processes.
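A standard textbook case of the Clausius inequality is the free expansion of an ideal gas into a vacuum inside a thermally insulated vessel. No heat is exchanged, so the integral of δQ/T along the real process is zero. Yet the entropy grows by nR ln(V₂/V₁), computed along a reversible isothermal path between the same end states. The sketch assumes an ideal gas, 1 mol, and a doubling of volume; the function name is illustrative.

```python
import math

R = 8.314462618  # molar gas constant, J/(mol*K)


def free_expansion(n_mol: float, v1: float, v2: float):
    """Adiabatic free expansion of an ideal gas from volume v1 to v2.

    Returns (clausius_integral, delta_s) in J/K. The real process exchanges no
    heat, so the integral of dQ/T along it is zero. Because entropy is a state
    function, delta_s is evaluated along a reversible isothermal path between
    the same end states (the temperature of an ideal gas does not change here).
    """
    clausius_integral = 0.0                     # Q = 0 along the actual process
    delta_s = n_mol * R * math.log(v2 / v1)     # n R ln(V2 / V1)
    return clausius_integral, delta_s


integral, delta_s = free_expansion(1.0, 1.0, 2.0)
print(f"integral of dQ/T = {integral} J/K, entropy change = {delta_s:.3f} J/K")
```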

Using the concept of entropy, Clausius (1876) gave the most general formulation of the second law of thermodynamics: in real (irreversible) adiabatic processes, entropy increases and reaches its maximum value in the state of equilibrium (the second law is not absolute; it is violated by fluctuations).

Absolute entropy (S) of a substance or process is the change in the energy available for heat transfer at a given temperature (Btu/R, J/K). Mathematically, entropy equals the heat transferred divided by the absolute temperature at which the process occurs. Therefore, processes that transfer a large quantity of heat increase entropy more. Entropy changes are also larger when heat is transferred at low temperatures. Since absolute entropy concerns the usability of all the energy in the universe, temperature is usually expressed in absolute units (R, K).

Specific entropy (s) is entropy per unit mass of a substance. Temperatures used in calculating entropy differences between states are often given in degrees Fahrenheit or Celsius. A degree Fahrenheit is the same size as a degree Rankine, and a degree Celsius is the same size as a kelvin. Temperature differences therefore come out the same on either scale, so such difference calculations are correct whether the temperatures are expressed in absolute or conventional units. Entropy values for a substance are given relative to the same reference temperature as its enthalpy values.

To summarize: entropy keeps increasing, so with every action we take, we increase chaos.

The complicated, simply explained

Entropy is a measure of disorder (and a characteristic of state). Visually, the more evenly things are distributed in a given space, the greater the entropy. If sugar lies in a glass of tea as a lump, the entropy of this state is small; if it is dissolved and distributed throughout the entire volume, the entropy is high. Disorder can be measured, for example, by counting how many ways objects can be arranged in a given space (the entropy is then proportional to the logarithm of the number of arrangements). If all the socks are folded compactly in one stack on a shelf in the closet, the number of possible arrangements is small and comes down to the number of ways the socks in the stack can be reordered. If the socks can be anywhere in the room, the number of ways to lay them out is inconceivably large. These layouts never repeat in a lifetime, just like the shapes of snowflakes. The entropy of the “socks scattered” state is enormous.
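The sock example can be turned into a rough count. This sketch uses assumed numbers: 20 distinguishable socks and 10,000 distinguishable spots in the room. In the stacked state the socks allow only n! orderings, while scattered socks can occupy any distinct spots. The entropy, in units of Boltzmann's constant, is the natural logarithm of the number of arrangements. The function names are hypothetical.

```python
import math


def ln_arrangements_stack(n_socks: int) -> float:
    """ln W when distinguishable socks can only be reordered within one stack: W = n!."""
    return math.log(math.factorial(n_socks))


def ln_arrangements_room(n_socks: int, n_spots: int) -> float:
    """ln W when each sock occupies a distinct spot among n_spots: W = n_spots! / (n_spots - n_socks)!."""
    return math.log(math.perm(n_spots, n_socks))


N_SOCKS = 20        # assumed number of socks
N_SPOTS = 10_000    # assumed number of distinguishable places in the room

s_stack = ln_arrangements_stack(N_SOCKS)          # entropy / k_B for the tidy stack
s_room = ln_arrangements_room(N_SOCKS, N_SPOTS)   # entropy / k_B for scattered socks
print(f"stack: ln W = {s_stack:.1f}, room: ln W = {s_room:.1f}")
```

Because entropy grows with the logarithm of W, a scattered room with only 20 socks already has several times the "sock entropy" of the stack.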

The second law of thermodynamics states that entropy cannot decrease spontaneously in a closed system (usually it increases). Because of it, smoke dissipates, sugar dissolves, stones crumble and socks get scattered over time. This tendency has a simple explanation: things usually move (moved by us or by the forces of nature) under random impulses that have no common goal. If the impulses are random, everything will move from order to disorder, because there are always more ways to reach disorder. Imagine a chessboard. A king in the corner can leave it in three ways, and every one of those moves leads away from the corner. From each adjacent square, by contrast, there is only one way back into the corner, and that move is just one of 5 or 8 possible moves. If you deprive the king of a goal and let him move randomly, he will eventually be about equally likely to end up anywhere on the board, and the entropy will become higher.
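The chessboard argument can be checked exactly rather than by simulation. The sketch below assumes that at every step the king picks one of its legal moves with equal probability. It propagates the probability distribution over the 64 squares and reports its Shannon entropy in bits; the function names are illustrative. Starting from the corner, the entropy rises from 0 toward about 5.95 bits. It stays slightly below log₂ 64 = 6 bits because, in the long run, each square is visited in proportion to its number of moves (3, 5 or 8). The spread is therefore close to uniform, but not exactly uniform.

```python
import math

SIZE = 8  # a standard 8 x 8 chessboard


def king_moves(r: int, c: int):
    """All squares a king can reach from (r, c) in one move."""
    return [(r + dr, c + dc)
            for dr in (-1, 0, 1) for dc in (-1, 0, 1)
            if (dr, dc) != (0, 0) and 0 <= r + dr < SIZE and 0 <= c + dc < SIZE]


def step(dist):
    """Advance the probability distribution by one uniformly random king move."""
    new = [[0.0] * SIZE for _ in range(SIZE)]
    for r in range(SIZE):
        for c in range(SIZE):
            p = dist[r][c]
            if p > 0:
                moves = king_moves(r, c)
                for nr, nc in moves:
                    new[nr][nc] += p / len(moves)
    return new


def entropy_bits(dist):
    """Shannon entropy of the king's position, in bits."""
    return sum(-p * math.log2(p) for row in dist for p in row if p > 0)


dist = [[0.0] * SIZE for _ in range(SIZE)]
dist[0][0] = 1.0  # the king starts in a corner: a perfectly ordered state
history = [entropy_bits(dist)]
for _ in range(500):
    dist = step(dist)
    history.append(entropy_bits(dist))

print([round(h, 2) for h in history[:6]], round(history[-1], 3))
```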

In a gas or liquid, the role of such a disordering force is played by thermal motion. In your room, it is played by your momentary desires to go here or there, lie around, work, and so on. It doesn’t matter what these desires are; the main thing is that they have nothing to do with cleaning and are unrelated to each other. To reduce entropy, you need to subject the system to external influence and do work on it. For example, according to the second law, the entropy in the room will keep increasing until your mother comes in and asks you to tidy up a little. The need to do work also means that any system will resist a decrease in entropy and the establishment of order. It’s the same story in the Universe: entropy began to increase with the Big Bang, and it will continue to grow until Mom comes.

Measure of chaos in the Universe

The classical way of calculating entropy cannot be applied to the Universe, because gravitational forces act in it, and matter by itself cannot form a closed system. For the Universe, entropy is in effect a measure of chaos.

The main and largest sources of disorder observed in our world are considered to be black holes, both massive and supermassive.

Attempts to calculate the measure of chaos precisely are made constantly, but none can yet be called successful: estimates of the entropy of the Universe scatter by one to three orders of magnitude. This is due not only to gaps in knowledge. There is too little information about how all the known celestial objects, and also dark energy, affect the calculations. The study of dark energy's properties is still in its infancy, but its influence may be decisive. The measure of chaos in the Universe changes all the time, and scientists keep conducting studies to identify general patterns. Then it will be possible to make fairly accurate predictions about the existence of various space objects.

Heat Death of the Universe

Any closed thermodynamic system has a final state, and the Universe is no exception. When the directed exchange of all forms of energy stops, all of that energy will have turned into thermal energy. The system will reach a state of thermal death when its thermodynamic entropy reaches its highest value. This conclusion about the end of our world was formulated by R. Clausius in 1865 on the basis of the second law of thermodynamics. According to this law, a system that does not exchange energy with other systems tends toward an equilibrium state, and that state may well have the parameters characteristic of the thermal death of the Universe. But Clausius did not take into account the influence of gravity. An ideal gas reaches maximum entropy when its particles are distributed uniformly over its volume. For the Universe, by contrast, a uniform distribution of particles does not correspond to maximum entropy. And so it remains unclear whether entropy is an adequate measure of chaos, or of the death, of the Universe.

Entropy in our lives

Seemingly in defiance of the second law of thermodynamics, according to which everything should develop from the complex to the simple, evolution on Earth moves in the opposite direction. This apparent inconsistency is explained by the thermodynamics of irreversible processes. If we picture a living organism as an open thermodynamic system, it takes in less entropy than it releases.

Nutrients have less entropy than the excretory products formed from them. That is, the organism stays alive because it can throw out the entropy produced in it by irreversible processes. For example, about 170 g of water is removed from the body by evaporation; in this way, the human body compensates for the decrease in entropy through certain chemical and physical processes.

Entropy is a measure of the freedom of a system's state. The fewer restrictions the system has, the greater its entropy, provided that it has many degrees of freedom. It follows that zero entropy corresponds to complete information, and maximum entropy to complete ignorance.

Our whole life is pure entropy, because the measure of chaos sometimes exceeds the measure of common sense. Perhaps the time is not far off when we fully arrive at what the second law of thermodynamics predicts. It sometimes seems that the development of some people, and even of entire states, has already gone backwards, from the complex to the primitive.

Conclusions

Entropy is a function of the state of a physical system whose increase is due to the reversible supply of heat to the system;

it is the quantity of internal energy that cannot be converted into mechanical work;

the exact value of entropy is determined by mathematical calculation, which establishes for each system the corresponding state parameter (thermodynamic property) of its bound energy. Entropy manifests itself most clearly in thermodynamic processes, which are divided into reversible and irreversible. In reversible processes entropy remains unchanged; in irreversible ones it constantly grows, and this increase comes at the expense of mechanical energy.

Consequently, all the many irreversible processes that occur in nature are accompanied by a decrease in mechanical energy, which should ultimately lead to a standstill, to “thermal death.” But this cannot be asserted, since from the standpoint of cosmology it is impossible to gain complete empirical knowledge of the Universe as a whole. Such knowledge would be needed before our notion of entropy could reasonably be applied to it. Christian theologians believe that, based on entropy, one can conclude that the world is finite and use this to prove the “existence of God.” In cybernetics, the word "entropy" is used in a sense different from its direct meaning, one that can only be formally derived from the classical concept. There it means the average information content, or the uncertainty regarding the value of the “expected” information.

Quite quickly you will realize that you won’t succeed, but don’t be discouraged: you didn’t solve the Rubik’s cube, but you illustrated the second law of thermodynamics:

The entropy of an isolated system cannot decrease.

The heroine of Woody Allen's film Whatever Works gives the following definition of entropy: it's what makes it hard to put toothpaste back in the tube. She also explains the Heisenberg uncertainty principle in an interesting way, which is another reason to watch the movie.

Entropy is a measure of disorder, of chaos. Say you invited friends to a New Year's party: you cleaned up, washed the floor, laid out snacks on the table and set out drinks. In short, you put everything in order and eliminated as much chaos as you could. This is a system with low entropy.

You can all probably imagine what happens to the apartment if the party is a success: complete chaos. In the morning you have at your disposal a system with high entropy.

To put the apartment in order, you need to tidy up, which means spending a lot of energy. The entropy of the system has decreased, but there is no contradiction with the second law of thermodynamics: you added energy from outside, so the system is no longer isolated.

An unequal fight

One of the options for the end of the world is the thermal death of the Universe due to the second law of thermodynamics. The entropy of the universe will reach its maximum and nothing else will happen in it.

In general, everything sounds rather depressing: in nature, all ordered things tend toward destruction, toward chaos. But then where does life on Earth come from? All living organisms are incredibly complex and ordered, and somehow they spend their entire lives fighting entropy (although it always wins in the end).

Everything is very simple. In the course of their lives, living organisms redistribute entropy around themselves; that is, they pass their entropy on to everything they can. For example, when we eat a sandwich, we turn beautiful, ordered bread and butter into something we all know about. It turns out that we gave our entropy to the sandwich, and in the system as a whole, entropy has not decreased.

And if we take the Earth as a whole, then it is not a closed system at all: the Sun supplies us with energy to fight entropy.

The most important parameter of the state of matter is entropy (S). The change in entropy in a reversible thermodynamic process is determined by the equation that is an analytical expression of the second law of thermodynamics:

$$dS = \frac{\delta Q}{T},$$

and for 1 kg of substance:

$$ds = \frac{\delta q}{T},$$

where δq (kJ/kg) is the infinitesimal amount of heat supplied or removed in an elementary process at temperature T.

Entropy was first identified by Clausius (1865). Having discovered a new quantity in nature, previously unknown to anyone, Clausius gave it the strange and obscure name “entropy”, a word he coined himself. He explained its meaning as follows: "trope" in Greek means "transformation." To this root Clausius added two letters, “en”, so that the resulting word would be as similar as possible to the word “energy”. The two quantities are so close in physical significance that a certain similarity in their names was appropriate.

Entropy is a derived concept from the concept of “state of an object” or “phase space of an object.” It characterizes the degree of variability of the microstate of an object. Qualitatively, the higher the entropy, the greater the number of significantly different microstates an object can be in for a given macrostate.

You can give another definition, less strict and precise but more visual: ENTROPY is a measure of depreciated energy, useless energy that cannot be used to produce work. Or:

HEAT is the queen of the world, ENTROPY is her shadow.

All real processes are irreversible: they cannot be carried out at will in the forward and backward directions without leaving a trace in the surrounding world. Thermodynamics should help researchers know in advance whether a real process is possible without actually carrying it out. This is why the concept of “entropy” is needed.

Entropy is a property of a system that is completely determined by the state of the system. No matter how the system moves from one state to another, the change in its entropy will always be the same.

In general, the absolute entropy of a system or body cannot be calculated, just as its absolute energy cannot be determined. Only the change in entropy during a transition from one state to another can be calculated, by considering a quasi-static (reversible) path between the two states.

There is no special name for the unit of entropy. Entropy is measured in J/K, and specific entropy in J/(kg·K).

Clausius equation:

$$\Delta S = S_2 - S_1 = \sum \left(\frac{Q}{T}\right)_{\text{rev}}$$

The change in entropy during the transition of a system from one state to another is exactly equal to the sum of the reduced heats.
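The statement that ΔS equals the sum of the reduced heats Q/T can be checked numerically. The sketch below assumes 1 kg of water with a constant specific heat of about 4186 J/(kg·K), heated reversibly from 293.15 K to 353.15 K in n small stages. Each stage contributes Q_i/T_i at its midpoint temperature. As n grows, the sum approaches the integral m·c·ln(T₂/T₁). The function name and the numbers are illustrative.

```python
import math


def sum_of_reduced_heats(mass_kg, c_j_per_kg_k, t1_k, t2_k, n_steps):
    """Approximate Delta S as sum(Q_i / T_i) over n_steps small reversible heating stages.

    Each stage adds Q_i = m * c * dT and is evaluated at its midpoint temperature T_i.
    """
    dt = (t2_k - t1_k) / n_steps
    total = 0.0
    for i in range(n_steps):
        t_mid = t1_k + (i + 0.5) * dt   # temperature during stage i
        q_i = mass_kg * c_j_per_kg_k * dt
        total += q_i / t_mid            # reduced heat of stage i
    return total


M, C = 1.0, 4186.0          # assumed: 1 kg of water, c ~ 4186 J/(kg*K), taken as constant
T1, T2 = 293.15, 353.15     # 20 degC -> 80 degC
exact = M * C * math.log(T2 / T1)

for n in (1, 10, 1000):
    approx = sum_of_reduced_heats(M, C, T1, T2, n)
    print(f"n={n:5d}  sum Q/T = {approx:.4f} J/K  (integral: {exact:.4f} J/K)")
```

Splitting the heating into more stages makes each stage closer to isothermal. This is why the sum converges to the integral of δQ/T.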

Entropy is a measure of statistical disorder in a closed thermodynamic system. The more order, the less entropy. And vice versa, the less order, the greater the entropy.

All spontaneously occurring processes in a closed system, bringing the system closer to a state of equilibrium and accompanied by an increase in entropy, are directed towards increasing the probability of the state (Boltzmann).

Entropy is a function of state, therefore its change in a thermodynamic process is determined only by the initial and final values of the state parameters.

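The change in entropy of a unit quantity of an ideal gas in the basic thermodynamic processes is:

In an isochoric process: $\Delta s_v = c_v \ln\frac{T_2}{T_1}$

In an isobaric process: $\Delta s_p = c_p \ln\frac{T_2}{T_1}$

In an isothermal process: $\Delta s_T = R \ln\frac{p_1}{p_2} = R \ln\frac{V_2}{V_1}$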
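The three ideal-gas formulas can be written as small functions. This sketch works per mole and assumes a monatomic gas (c_v = 3R/2, c_p = c_v + R). With c_v, c_p and R taken per kilogram, the same functions give specific entropy. The final check confirms that the isobaric result equals the isochoric one plus the isothermal volume term, since at constant pressure V₂/V₁ = T₂/T₁. The function names are illustrative.

```python
import math

R = 8.314462618     # molar gas constant, J/(mol*K)
CV = 1.5 * R        # assumed: monatomic ideal gas, molar heat capacity at constant volume
CP = CV + R         # Mayer's relation for an ideal gas


def ds_isochoric(t1, t2, cv=CV):
    """Delta S at constant volume: c_v ln(T2/T1)."""
    return cv * math.log(t2 / t1)


def ds_isobaric(t1, t2, cp=CP):
    """Delta S at constant pressure: c_p ln(T2/T1)."""
    return cp * math.log(t2 / t1)


def ds_isothermal_pressure(p1, p2, r=R):
    """Delta S at constant temperature, from pressures: R ln(p1/p2)."""
    return r * math.log(p1 / p2)


def ds_isothermal_volume(v1, v2, r=R):
    """Delta S at constant temperature, from volumes: R ln(V2/V1)."""
    return r * math.log(v2 / v1)


t1, t2 = 300.0, 600.0
print(f"isochoric:              {ds_isochoric(t1, t2):.3f} J/(mol*K)")
print(f"isobaric:               {ds_isobaric(t1, t2):.3f} J/(mol*K)")
print(f"isothermal (p halved):  {ds_isothermal_pressure(2.0e5, 1.0e5):.3f} J/(mol*K)")
print(f"isothermal (V doubled): {ds_isothermal_volume(1.0, 2.0):.3f} J/(mol*K)")

# At constant pressure V2/V1 = T2/T1, so the isobaric change splits into
# an isochoric heating term plus an isothermal expansion term.
assert math.isclose(ds_isobaric(t1, t2), ds_isochoric(t1, t2) + ds_isothermal_volume(t1, t2))
```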

Entropy is a measure of the complexity of a system: not disorder, but complication and development. The greater the entropy, the harder it is to understand the logic of a particular system, situation or phenomenon. It is generally accepted that the more time passes, the less ordered the Universe becomes. The reason for this is the different rates of development of the Universe as a whole and of us as observers of entropy. We, as observers, are many orders of magnitude simpler than the Universe. Therefore it seems excessively redundant to us, and we cannot grasp most of the cause-and-effect relationships that make it up. The psychological aspect is also important: people find it hard to accept that they are not unique. The thesis that humans are the crown of evolution is not far removed from the earlier conviction that the Earth is the center of the universe. It is pleasant for a person to believe in their own exclusivity, and it is not surprising that we tend to see structures more complex than ourselves as disordered and chaotic.

There are very good answers above that explain entropy in terms of the modern scientific paradigm. The respondents explain this phenomenon with simple examples: socks scattered around the room, broken glasses, monkeys playing chess, and so on. But if you look closely, you see that order here is expressed as a thoroughly human concept. The word “better” applies to a good half of these examples. Socks folded in the closet are better than socks scattered on the floor. A whole glass is better than a broken one. A notebook written in beautiful handwriting is better than one covered in blots. From a human point of view, it is not clear what to do with entropy. The smoke coming out of a chimney serves no purpose. A book torn into small pieces is useless. It is hard to extract even a minimum of information from the many-voiced chatter and noise in the subway. In this sense, it is very interesting to return to the definition of entropy introduced by the physicist and mathematician Rudolf Clausius, who saw it as a measure of the irreversible dissipation of energy. To whom is this energy lost? Who finds it harder to use? Humans, of course! It is very difficult (if not impossible) to collect spilled water, every drop, back into a glass. To mend old clothes, you need new material (fabric, thread, etc.). This view does not take into account what this entropy may mean for beings other than people. Here is an example where energy dissipation, a loss for us, means exactly the opposite for another system:

You know that every second a huge amount of information flies from our planet into space, for example in the form of radio waves. For us, this information seems completely lost. But if a sufficiently developed alien civilization lies in the path of these radio waves, its representatives could receive and decode part of this energy that is lost to us. They could hear and understand our voices, watch our television and radio programs, and tap into our Internet traffic. In that case, our entropy could be harnessed by other intelligent beings. And the more energy we dissipate, the more they can collect.